See all your cloud security risks. Close exposures fast.

Gain unmatched risk context—from your full cloud stack—to protect multi-cloud and hybrid cloud environments

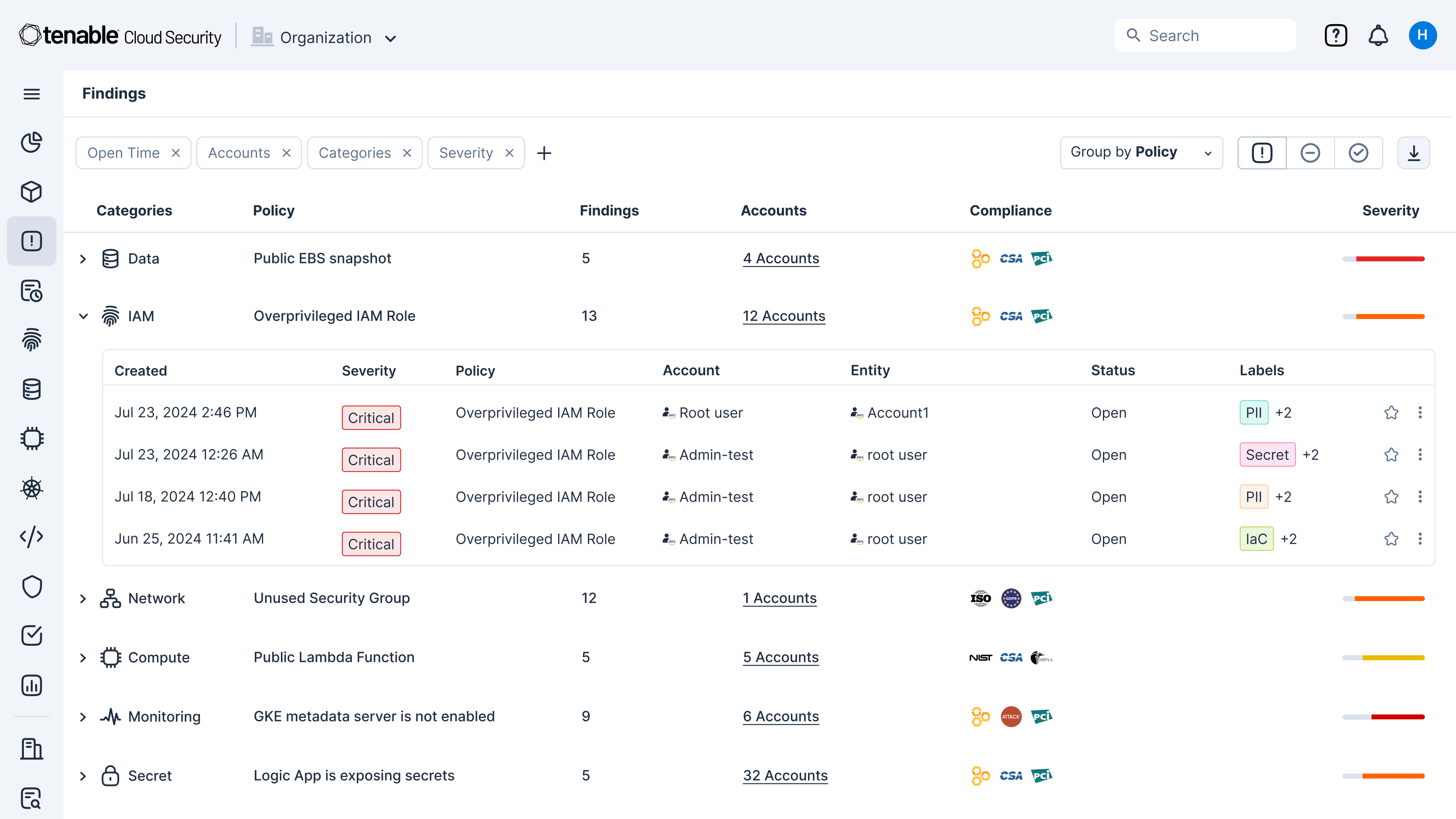

Quickly identify cloud exposures with full context

Secure your cloud from the four big risks: misconfigurations, vulnerabilities, unsecured identities and vulnerable sensitive data. Expose toxic combinations of these risks to prioritize critical remediation steps.
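
As a rough illustration of what a "toxic combination" means, the sketch below flags assets that carry more than one of the four risk categories at once. It is not Tenable's detection logic: the `Asset` class, the `toxic_combinations` function, the category labels and the sample asset names are all hypothetical, chosen only to show the idea.

```python
from dataclasses import dataclass, field

# The four risk categories named above (labels are illustrative assumptions).
RISK_TYPES = {"misconfiguration", "vulnerability", "unsecured_identity", "sensitive_data"}


@dataclass
class Asset:
    """Hypothetical cloud asset with the set of risk categories found on it."""
    name: str
    risks: set = field(default_factory=set)


def toxic_combinations(assets, min_risks=2):
    """Return (asset name, sorted risk list) for assets with at least
    `min_risks` distinct risk categories, most risks first."""
    flagged = []
    for asset in assets:
        present = asset.risks & RISK_TYPES
        if len(present) >= min_risks:
            flagged.append((asset.name, sorted(present)))
    # Sort by descending number of risks, then by name for stable output.
    flagged.sort(key=lambda item: (-len(item[1]), item[0]))
    return flagged


# Made-up sample inventory.
assets = [
    Asset("vm-web", {"vulnerability", "misconfiguration", "unsecured_identity"}),
    Asset("bucket-logs", {"sensitive_data"}),
    Asset("db-orders", {"sensitive_data", "misconfiguration"}),
]
result = toxic_combinations(assets)
for name, risks in result:
    print(name, risks)
```

In this toy inventory, `vm-web` is listed first because it combines three risk categories, while `bucket-logs` is not flagged because it has only one.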

Unify visibility for multi-cloud resources

Get a unified inventory of all your cloud assets. From development to runtime, continuously discover resources across your cloud and hybrid environments including infrastructure, workloads, AI resources, identities, containers, Kubernetes and infrastructure as code (IaC).

Gain a 360-degree view of risk

Use integrated cloud security tools to find vulnerabilities, misconfigurations and excessive permissions across your cloud environments. Use Tenable’s Vulnerability Priority Rating scores to focus on the critical risks across your multi-cloud and on-prem attack surface. Prioritize risks that matter most while enforcing the principles of zero trust and least privilege, including through just-in-time (JIT) access.

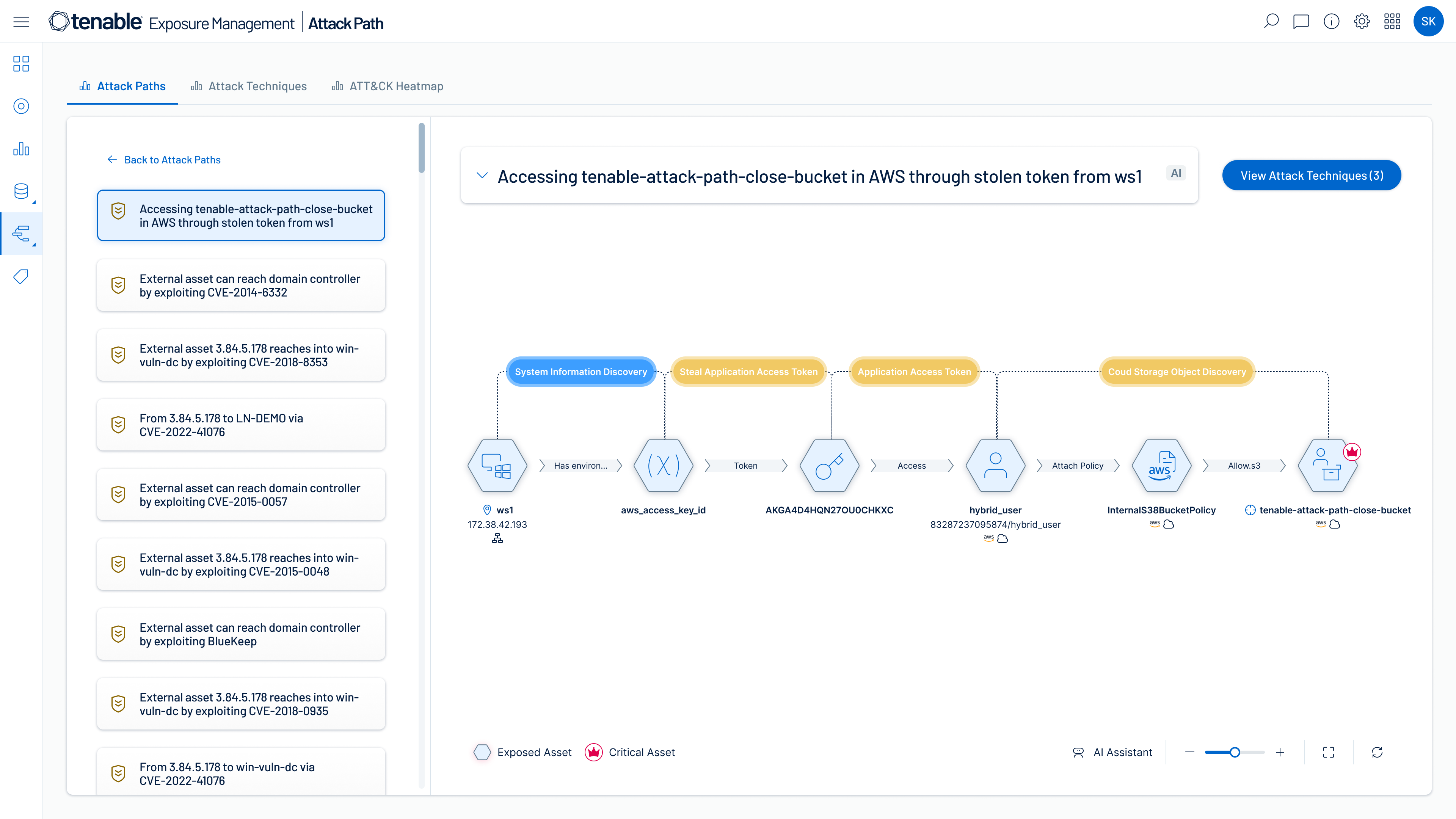

Identify high-risk attack paths

Map complex asset, identity and risk relationships to uncover attack paths that traverse hybrid cloud environments. Prioritize remediation of choke points that disrupt attack paths before breaches begin.
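
To make the idea of a choke point concrete, here is a minimal sketch that treats assets and identities as nodes in a directed graph, enumerates the simple paths from entry points to critical assets, and reports the intermediate nodes that sit on every path. This is an assumption-laden toy, not how Tenable computes attack paths: the graph, the node names and the functions `simple_paths` and `choke_points` are hypothetical.

```python
from collections import Counter


def simple_paths(graph, start, target, path=None):
    """Yield every cycle-free path from `start` to `target` in a directed graph
    given as {node: [neighbor, ...]}."""
    path = (path or []) + [start]
    if start == target:
        yield path
        return
    for nxt in graph.get(start, []):
        if nxt not in path:  # avoid revisiting nodes
            yield from simple_paths(graph, nxt, target, path)


def choke_points(graph, entries, targets):
    """Return the intermediate nodes that appear on every entry-to-target path."""
    counts = Counter()
    total = 0
    for entry in entries:
        for target in targets:
            for path in simple_paths(graph, entry, target):
                total += 1
                counts.update(path[1:-1])  # count intermediates only
    return sorted(node for node, n in counts.items() if total and n == total)


# Made-up hybrid environment: two ways in, one shared admin role.
graph = {
    "internet": ["vm-web", "vpn-gateway"],
    "vm-web": ["role-admin"],
    "vpn-gateway": ["role-admin"],
    "role-admin": ["db-orders", "bucket-pii"],
}
print(choke_points(graph, ["internet"], ["db-orders", "bucket-pii"]))
```

In this example every path to the two data stores passes through `role-admin`, so tightening that one identity would disrupt all four paths at once.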

Find, prioritize and reduce cloud security risks with accuracy and confidence

When you choose Tenable Cloud Security as part of the Tenable One Exposure Management Platform — in addition to getting deep insight into all your cloud resources, identities and risks — you can extend exposure management to secure your entire attack surface including multi-cloud and hybrid cloud environments.

CWP

Vulnerability management across all running cloud workloads

CSPM

Compliance and governance for the full cloud stack

CIEM

Least-privilege access and zero trust

DSPM

Discovery and classification of data and risk prioritization

IaC

Infrastructure as Code security and DevOps workflow support

AI-SPM

Visibility and security for AI workloads, services and data used in training and inference

CDR

Service and host activity analysis

KSPM

Container security and compliance in the cloud and on-prem

Accelerate search, insight and action with generative AI that uncovers hidden risks and amplifies security expertise across your environment.

Leverage the world’s largest repository of asset, exposure, and threat context that powers ExposureAI’s unparalleled insights.

Organize data to reduce redundancy and improve integrity, consistency and efficiency.

Enrich data with additional insights, making it more actionable and useful.

Identify and surface critical relationship context to core business services and functions.

Grasp the state of cloud and AI security

The new State of Cloud and AI Security 2025 report, commissioned by Tenable in partnership with CSA, delivers critical insights into evolving security trends and organizational readiness.

It was designed to uncover how organizations are adapting their strategies, prioritizing risk, and measuring progress across key areas:

- Evolving Security Response

- Securing the Cloud Stack

- Risk & Barriers

- Leadership Alignment

- AI in Focus

Explore the survey to get ahead of the curve and align your security program with the industry's evolving knowledge, attitudes, and opinions on cloud and AI security trends.

Read the report

Eliminate cloud blind spots with advanced exposure management capabilities

Tenable Cloud Security now available through GSA OneGov

The GSA and Tenable OneGov agreement provides Tenable Cloud Security FedRAMP at substantial cost savings for federal agencies.

Learn more about Tenable Cloud Security

“Using [Tenable Cloud Security] automation allowed us to eliminate exhaustive manual processes and perform in minutes what would have taken two or three security people months to accomplish.”

- Tenable Cloud Security